How To Efficiently Load Data Into Postgres Using Python

To efficiently load data into postgres database using python, I will recommend two methods in this article. They are efficient, easy to implement and require less coding. These methods are the use of SQLAlchemy and the other entails using psycopg2 package only. Let’s dive into them.

While this article can be a standalone, it is important you know where we started. The data extraction stage is found here 👉 Beginner Friendly ETL Project with Python, Postgres, Power BI while the transformation stage 👉 Easily Transform Data Using Pandas and Regular Expression.

SQLAlchemy

This is a very unique and simple way to load csv data into postgres database.

The syntax above is self explained. To use this, you need to import the module and instantiate the engine.

from sqlalchemy import create_engine

engine=create_engine("postgresql+psycopg2://postgres:xxxx@localhost:5432/postgres")

Notice how the dialect is in sync with the driver. As soon as the engine is created, you use pandas’ to_sql() method to create the table and populate the database with the data in csv file.

Another method of importing data into postgres is using the pgadmin. This is purely a gui method and I will not recommend this method. In today’s stack, you want to as much as possible automate processes so as to remove or reduce human error.

Psycopg2

This is another recommended way to load csv data into postgres database. I will walk you through how I used this method to upload the ebay dataset into postgres database.

Psycopg2 is a python open source package that implements the postgres protocol. Essentially, psycopg2 is to postgres what sqlite3 is to sqlite.

Importing Packages and Modules

To use this package, we need to install it and then import it into our IDE and the os module.

import os import psycopg2

Here the job of the os is to help call the password and username I saved in my local environment variable.

Declaring The Connection Variables

pwd = os.environ['PASSWORD'] user = os.environ['USERNAME1'] database = 'ebay_laptops' hostname = 'localhost' port_id = 5432

It is good practice to hide sensitive data such as password, username, api key. Some of the ways to do this is using an environment variable, config file or the host/remote server.

The database name I created is ‘ebay_laptops’ (You need to manually create the database in pgadmin or use execute method to do so), the hostname is localhost which is my computer and postgres general port which is 5432.

The Script

conn = None

cur = None

try:

conn = psycopg2.connect(

host = hostname,

dbname = database,

user = user,

password = pwd,

port = port_id)

cur = conn.cursor()

cur.execute('DROP TABLE IF EXISTS laptops')

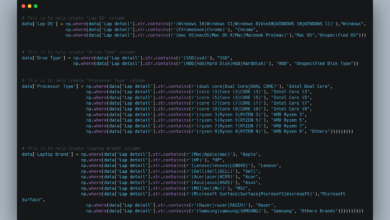

create_script = ''' CREATE TABLE IF NOT EXISTS laptops (

Lap_detail varchar(300) NOT NULL,

laptop_state varchar(50),

laptop_price varchar(50),

drive_size varchar(50),

ram varchar(50),

ram_size varchar(10),

laptop_os varchar(50),

lap_os varchar(50),

drive_type varchar(50),

processor_type varchar(50),

laptop_brands varchar(50)) '''

cur.execute(create_script)

with open('laptops.csv', 'r', encoding="mbcs") as f:

next(f) #This skips the header row.

cur.copy_from(f, 'laptops', sep=',')

conn.commit()

except Exception as error:

print(error)

finally:

if cur is not None:

cur.close()

if conn is not None:

conn.close()

Explaining The Code

First, I created an instance of the postgres connection class, save it in a conn variable. Used cursor() method of the Connection class to create a cursor object, then use the execute() method to drop the table name if it exists, then execute the script that creates the table.

Next code will look for the directory where I saved the csv file, read the file, encode it in multibyte character and save it as f.

next(f) is used to skip the header row. For csv files, the first row is their header. Use the copy_from() in the Cursor to copy the data into a table named ‘Laptops’ with each column indicated by the separator comma.

Notice that when creating the table columns, I used only varchar, this is to accommodate all types of datatypes. The reason was that I intend converting the columns to appropriate types and perform the final cleaning with Power Query in Power BI.

You could do this with pandas(recommended way) or sql, but I chose to handle this part with power query.

Lastly all these codes are wrapped in a try and except block to catch and print any error. In the finally block, I closed the connection. It is a good practice to always close any database connection you opened.

Conclusion

In this article, you have learnt how to efficiently load data into postgres database using python. The two methods will effectively upload your csv file.

It is important you visit the psycopg2 documentation for a complete method as it affects your dataset and the problem you want to solve.

Join me to the last part of this report where I will be discussing how I analyzed, built reports for the CEO of Global Sygnature and present our recommendation.

2 Comments