Easily Load Data Into Google Cloud Storage Using Python

While Google Cloud Storage, Amazon S3 and Azure Blob Storage all make it easy to create buckets and load files into them through their easy-to-use web interfaces, this article is a beginner-friendly, step-by-step guide to loading data into Google Cloud Storage using Python.

It is worth noting that when building a pipeline, you will most likely want to automate the workflow, and graphical user interfaces are not built for automation.

This article is divided into the following sections:

💡Introduction to Cloud Storage

💡Functionality and Capacity of Cloud Storage

💡Prerequisites

💡How to Create a Bucket in Cloud Storage

💡How to Load Files into a Cloud Storage Bucket

💡Conclusion

Introduction To Google Cloud Storage

Google Cloud Storage (GCS) is a RESTful online file storage web service for storing and accessing data on Google's infrastructure. It is a scalable, fully managed, highly reliable and cost-efficient object (blob) store. Now, let’s look at some of its functionality and capacity.
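Because Cloud Storage is exposed through a REST API, every bucket and object has an address. The sketch below shows two common forms: the gs:// URI used by tools such as gcloud, gsutil and BigQuery load jobs, and the JSON API metadata URL for an object, in which the object name must be percent-encoded (including any "/"). The helper names gs_uri and object_metadata_url are hypothetical; only the URL formats come from Google's documentation.

```python
from urllib.parse import quote

# Base URL of the Cloud Storage JSON API (v1)
JSON_API_BASE = "https://storage.googleapis.com/storage/v1"


def gs_uri(bucket_name: str, object_name: str) -> str:
    # gs://BUCKET/OBJECT is the URI form understood by Google's CLI tools and BigQuery
    return f"gs://{bucket_name}/{object_name}"


def object_metadata_url(bucket_name: str, object_name: str) -> str:
    # In the JSON API path the object name is percent-encoded, "/" included
    encoded = quote(object_name, safe="")
    return f"{JSON_API_BASE}/b/{bucket_name}/o/{encoded}"


print(gs_uri("my-ebay-laptop-prices", "my_ebay_laptops.csv"))
print(object_metadata_url("my-ebay-laptop-prices", "raw/my_ebay_laptops.csv"))
```

For example, an object named raw/my_ebay_laptops.csv appears as raw%2Fmy_ebay_laptops.csv in the JSON API path.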

Functionality and Capacity of Google Cloud Storage

Simply put, it is a service that allows you to store and retrieve files of any type and almost any size (a single object can be up to 5 TiB). GCS is to GCP what S3 is to AWS and what Azure Blob Storage is to Azure. Google Cloud Storage is designed for eleven 9s of annual durability, that is, 99.999999999%.
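To make "eleven 9s" concrete, here is a back-of-the-envelope calculation. It assumes the figure is an annual, per-object durability and that object losses are independent; the count of 10 million objects is purely illustrative. Fractions are used so the tiny probabilities stay exact instead of picking up floating-point rounding.

```python
from fractions import Fraction


def annual_loss_probability(nines: int) -> Fraction:
    # n nines of durability means durability = 1 - 10**-n,
    # so the chance of losing a given object in a year is 10**-n
    return Fraction(1, 10**nines)


def expected_losses_per_year(num_objects: int, nines: int = 11) -> Fraction:
    # Expected value: each object contributes 10**-n expected losses per year
    return num_objects * annual_loss_probability(nines)


objects = 10_000_000  # illustrative object count
losses = expected_losses_per_year(objects)
print(f"Expected objects lost per year: {float(losses)}")
print(f"Roughly one object every {1 / losses} years")
```

Under these assumptions, 10 million objects give 0.0001 expected losses per year, or about one lost object every 10,000 years.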

It is practically infinitely scalable, so it can accommodate companies of any size and all of their data. Before we create a bucket, let’s look at the prerequisites you will need.

Prerequisites

- Basic knowledge of Python

- A Google Cloud Platform account (your Gmail account is enough to sign up)

- A service account with Owner access (a narrower role such as Storage Admin is enough for this tutorial). Wait about 5 minutes for the permissions to propagate.

- A key for the service account, created and downloaded as a JSON file (put the file in the same folder as your main Python files)
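Since the key file sits next to your Python files, you can avoid hard-coding an absolute path like the Windows path used later in this article. The sketch below is a hypothetical helper (set_credentials is not part of any Google library): it resolves the key file relative to a folder you pass in, fails early if the file is missing, and sets the GOOGLE_APPLICATION_CREDENTIALS environment variable that the Google client libraries read.

```python
import os
from pathlib import Path


def set_credentials(folder: Path, key_filename: str = "serviceKeyGoogle.json") -> Path:
    """Point GOOGLE_APPLICATION_CREDENTIALS at a key file inside `folder`.

    Hypothetical helper: resolves the path, checks that the file exists
    and sets the environment variable read by the Google client libraries.
    """
    key_path = (Path(folder) / key_filename).resolve()
    if not key_path.is_file():
        # A clear error here beats a confusing authentication failure later
        raise FileNotFoundError(f"Service account key not found: {key_path}")
    os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = str(key_path)
    return key_path
```

In main.py or Load_file.py you could call set_credentials(Path(__file__).parent) before creating storage.Client(), so the scripts work from any machine that has the key in the project folder.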

How to Create a Bucket in Cloud Storage

Just like other cloud platforms, GCP gives you a clean web interface for creating buckets. However, as I mentioned earlier, when building a pipeline, your company will likely want the flow to be automated.

This is why creating buckets with one of the supported programming languages, or with Terraform, is preferred.
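When you create buckets from code, the bucket name must follow Cloud Storage's naming rules, and because bucket names share a single global namespace, it must also not be taken by anyone else. The sketch below checks a simplified subset of the documented rules before any API call is made; is_valid_bucket_name is a hypothetical helper, and it deliberately rejects names containing dots, which have extra rules.

```python
import re

# Simplified subset of the Cloud Storage bucket naming rules (names without dots):
# 3 to 63 characters of lowercase letters, digits, "-" and "_",
# starting and ending with a letter or digit.
_BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9_-]{1,61}[a-z0-9]")


def is_valid_bucket_name(name: str) -> bool:
    if not _BUCKET_NAME.fullmatch(name):
        return False
    # Names may not start with "goog" or contain "google"
    return not name.startswith("goog") and "google" not in name


for candidate in ["ebay-products-prices", "my-ebay-laptop-prices", "eBay_Prices", "ab"]:
    print(candidate, is_valid_bucket_name(candidate))
```

The two names used in this article pass; "eBay_Prices" fails because of its uppercase letters and "ab" because it is shorter than three characters. A name that passes can still be rejected by the API if another project already owns it.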

The Project Structure

Here we have the cloud_storage folder with four items in it. I used .gitignore to list the files I don’t want to commit to my repository. This is good practice, since the folder contains my GCP authentication file, which should never be pushed to a repository.

Load_file.py contains the function I used to upload the dataset (object), and main.py contains the function I used to create the buckets on Cloud Storage. Lastly, serviceKeyGoogle.json is the key file I downloaded when I created the service account in GCP.

📝 You can name your authentication file anything you want; just remember that it is already a .json file, so keep the .json extension.

The Python Code that Easily Created the Bucket

```python
import os

from google.cloud import storage

# Tell the Google client library where the service account key is
os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = r"C:\Users\kings\Documents\projects\cloud_storage\serviceKeyGoogle.json"


def create_bucket_class_location(bucket_name):
    """
    Create a new bucket in the US multi-region with the Coldline storage
    class.
    """
    # bucket_name = "your-new-bucket-name"

    storage_client = storage.Client()

    # Build a local Bucket object; no request is sent to GCP yet
    bucket = storage_client.bucket(bucket_name)
    bucket.storage_class = "COLDLINE"

    # Send the create request; "us" is the US multi-region
    new_bucket = storage_client.create_bucket(bucket, location="us")

    print(
        "Created bucket {} in {} with storage class {}".format(
            new_bucket.name, new_bucket.location, new_bucket.storage_class
        )
    )
    return new_bucket


if __name__ == "__main__":
    # Bucket names are globally unique, so choose your own names
    create_bucket_class_location('ebay-products-prices')
    create_bucket_class_location('my-ebay-laptop-prices')
```

Code Explanation

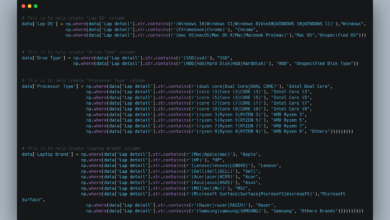

Here, I imported os, which lets me set the GOOGLE_APPLICATION_CREDENTIALS environment variable to the path of my credential file, and then imported the storage module from google.cloud, which provides the classes for creating buckets and storing objects in them.

The code snippet is a simple function that takes one input, the bucket name. Inside it, I instantiated storage.Client and saved it in the storage_client variable, then called its bucket method with bucket_name and saved the result in a variable named bucket. This only builds a local Bucket object; nothing is created in GCP yet.

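This lets me set the bucket’s storage_class to “COLDLINE” before the bucket is created. Next, I called the create_bucket method of storage_client, passing the bucket and its location ("us", the US multi-region). It sends the request to GCP and returns the newly created bucket, which I saved in new_bucket.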
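The function hard-codes the Coldline class, which Google positions for data read less than about once a quarter, with a 90-day minimum storage duration and retrieval fees. If you are unsure which class fits your data, the rule of thumb below, adapted from Google's storage-class guidance, may help. suggest_storage_class is a hypothetical helper and its thresholds are approximate.

```python
# Minimum storage duration, in days, for each Cloud Storage class
MIN_STORAGE_DAYS = {"STANDARD": 0, "NEARLINE": 30, "COLDLINE": 90, "ARCHIVE": 365}


def suggest_storage_class(reads_per_year: float) -> str:
    """Rule-of-thumb storage class for how often the data is read.

    Follows Google's guidance: Nearline for data read less than once a month,
    Coldline less than once a quarter, Archive less than once a year.
    """
    if reads_per_year < 1:
        return "ARCHIVE"
    if reads_per_year < 4:
        return "COLDLINE"
    if reads_per_year < 12:
        return "NEARLINE"
    return "STANDARD"


for reads in (0.5, 2, 6, 52):
    cls = suggest_storage_class(reads)
    print(f"{reads} reads/year -> {cls} (minimum {MIN_STORAGE_DAYS[cls]} days)")
```

If you plan to read the uploaded files often, for example to load them into BigQuery repeatedly, STANDARD may well work out cheaper than COLDLINE.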

Next, I printed the bucket’s name, location and storage class. Finally, the function returns new_bucket.

The last two lines of code call the function twice, creating two different buckets.

That is the value of a function: code reusability.
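Because the function is reusable, it is also worth making it safe to re-run: create_bucket raises a Conflict error (from google.api_core.exceptions) if you already own a bucket with that name. The sketch below is a hypothetical create_buckets_if_missing helper that checks with Client.lookup_bucket, which returns None when the bucket does not exist, before creating anything. It takes the client as an argument so it can be tried without a GCP account; in real use you would pass storage.Client().

```python
def create_buckets_if_missing(client, bucket_names, storage_class="COLDLINE", location="us"):
    """Create each bucket unless it already exists; return the names created.

    `client` is expected to behave like google.cloud.storage.Client
    (it needs lookup_bucket, bucket and create_bucket).
    """
    created = []
    for name in bucket_names:
        # lookup_bucket returns None when the bucket does not exist
        if client.lookup_bucket(name) is not None:
            print(f"Bucket {name} already exists, skipping")
            continue
        bucket = client.bucket(name)
        bucket.storage_class = storage_class
        new_bucket = client.create_bucket(bucket, location=location)
        print(f"Created bucket {new_bucket.name} in {new_bucket.location}")
        created.append(new_bucket.name)
    return created
```

Usage would look like create_buckets_if_missing(storage.Client(), ["ebay-products-prices", "my-ebay-laptop-prices"]). The check only covers buckets your credentials can see; a name already taken by someone else's project will still raise an error.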

📝 The function used to create the buckets comes from the official Google Cloud Storage documentation. I cannot stress enough how important the official documentation is: it is the single source of truth. Any other information out there is just a work of art and creativity.

How to Load Files into a Cloud Storage Bucket

I will go straight to explaining the code snippet.

```python
import os

from google.cloud import storage

# Tell the Google client library where the service account key is
os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = r"C:\Users\kings\Documents\projects\cloud_storage\serviceKeyGoogle.json"


def upload_blob(bucket_name, source_file_name, destination_blob_name):
    """Uploads a file to the bucket."""
    # The ID of your GCS bucket
    # bucket_name = "your-bucket-name"
    # The path to your file to upload
    # source_file_name = "local/path/to/file"
    # The ID of your GCS object
    # destination_blob_name = "storage-object-name"

    storage_client = storage.Client()
    bucket = storage_client.bucket(bucket_name)

    # The blob is the object's handle in the bucket; its name can differ
    # from the local file name
    blob = bucket.blob(destination_blob_name)
    blob.upload_from_filename(source_file_name)

    print(f"File {source_file_name} uploaded to {destination_blob_name}.")


if __name__ == "__main__":
    # Relative paths are resolved from the directory you run the script in
    source_file_name = "../ebay_prices/ebay_laptops.csv"
    bucket_name = "my-ebay-laptop-prices"
    destination_blob_name = "my_ebay_laptops.csv"

    upload_blob(bucket_name, source_file_name, destination_blob_name)
```

Code Explanation

Again, this is a function; it takes the bucket name, the source file and the destination blob name. This means the object in the bucket can have a different name from the one the file has on your local computer.
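The same idea scales to several files. Because the destination name is independent of the local name, you can also add a prefix such as raw/ to group objects; Cloud Storage names are flat, and the "/" is what the console displays as a folder. The sketch below is a hypothetical upload_folder helper that uses only Bucket.blob and Blob.upload_from_filename from the code above. It takes the bucket as an argument, so in real use you would pass storage.Client().bucket("my-ebay-laptop-prices").

```python
from pathlib import Path, PurePosixPath


def upload_folder(bucket, local_folder, prefix="", pattern="*.csv"):
    """Upload every file matching `pattern` in `local_folder` to `bucket`.

    `bucket` is expected to behave like google.cloud.storage.Bucket.
    Returns the list of destination object names.
    """
    uploaded = []
    for path in sorted(Path(local_folder).glob(pattern)):
        if not path.is_file():
            continue
        # Join prefix and file name with "/" regardless of the local OS
        blob_name = str(PurePosixPath(prefix) / path.name) if prefix else path.name
        bucket.blob(blob_name).upload_from_filename(str(path))
        print(f"File {path} uploaded to {blob_name}.")
        uploaded.append(blob_name)
    return uploaded
```

For example, upload_folder(bucket, "../ebay_prices", prefix="raw") would upload ebay_laptops.csv as raw/ebay_laptops.csv.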

Before calling the function, I created a variable for each of the three arguments. Running the script prints: File ../ebay_prices/ebay_laptops.csv uploaded to my_ebay_laptops.csv.

The dataset I used is the one I scraped from ebay.co.uk, and you can find my article on how I scraped the data here. Again, the function that powered this upload was taken from the GCP documentation.

Conclusion

In this article, you have learnt what Google Cloud Storage is, how to create buckets in Cloud Storage using Python and how to easily load data into Google Cloud Storage using Python. The next part of this project explores another important piece of Cloud Storage functionality: retrieving data using Python and loading it into Google BigQuery.