Beginner Friendly ETL Project with Python, Postgres, Power BI

As my workflow and learning process has always been. Learn ===> Apply ===> Grow. You can easily break into this industry with these stack.

Building a real time industry standard project mostly requires that you are with a company. However, there are some data science or data analytic projects online that can give you an idea of what it is like to build for a company.

In this project I built an etl pipeline with ebay product data using python, postgres and Power BI. I kept it as simple as it can be.

So this will be a four part project. This will help keep the article simple, straight to the point and somewhat independent. When I say independent I mean you can follow each article and it will completely form a project on its own.

The four parts are:

- Extracting data from ebay using python requests and beautiful soup

- Transforming the data using python pandas and numpy

- Loading the transformed data into postgres database

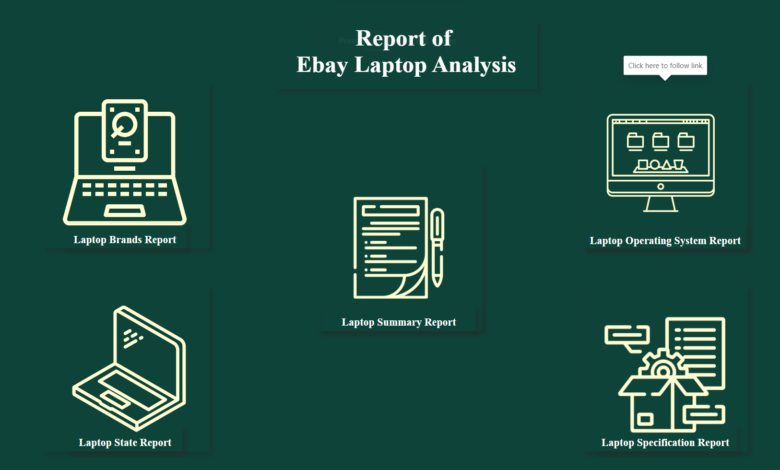

- Analysis and data storytelling using Power BI

Extracting data from ebay with easy to use requests and beautiful soup

The first part of an ETL pipeline is the data extraction, ETL stands for Extract Transform and Load.

It is a three-phase process where data is extracted from a single or multiple sources, transformed according to the problem statement, business requirement and business rules and loaded into an output data container which can be a database, data warehouse or a data lake.

Before you extract any data, it is important you know the data to extract and the problem you want to solve with this data. This brings us to the problem statement.

Problem Statement

The CEO of Global Sygnature, a company that sells new laptops in the city of Port Harcourt has gotten a lot of requests for fairly used quality laptops.

So he decided to go into this line of business to expand the services they render.

He has met Primedsoft, a business intelligence agency to help him extract data about laptops from ebay. The CEO said majority are looking for a UK used laptops because of their quality.

He would like to see metrics such as:

- Average Price of laptops

- Most expensive laptop listed

- Least expensive laptop listed

- Average prices of major brands

- The top most popular brands

- Most listed laptops by their OS

- Prices of laptops base on their current state

- Average price of laptops by drive type, disk size and RAM size.

- Any other insight that can be gotten from the data that will help in decision making.

Importing The Python Libraries and Modules

By supplying the metrics and the source data to extract, they have made it easy to know how to extract this data. So I will be using python’s requests module and BeautifulSoup4 library to extract the data from ebay.

The IDE I will be using is Jupyter Lab, please by all means use any IDE of your choosing.

At this point I believe you have foundational knowledge of python and you have installed the libraries below. The libraries and modules I will be importing for the data extraction are:

requests

BeautifulSoup

from time import sleep

randint

numpy

pandas

Requests is used to download the website. This time the ebay website we searched for.

BeautifulSoup is for extracting the desired data(text) from the website

The sleep class in time module is to get the code to sleep for sometime before scraping the next page. I would not need this if I am scraping just one page, but I need multiple pages in order to get enough data.

Randint class from random module is to randomly give a number of milliseconds or seconds the code will sleep before running again.

Numpy to help loop through the pages the number of times we need it to and Pandas to convert the data to dataframe and csv.

Inspecting The Web Pages

Let’s head over to ebay.co.uk to take a look at the structure of the website we want to search. Note that every website is different and will likely need a different approach when scraping.

This is where I believe that web scraping is as much art as it is science. You need to understand the structure of the web page(s) then find your own way of scraping it.

A careful look at the structure of the website search page for laptops, it can be seen that the search results are organized in cards (that is the name frontend developers call such shape).

At this point, if we can find a way to get hold of the html code behind the card, then we can easily loop through all the cards using python.

Let’s go deeper to see the code behind. Right click on the header, then click on “inspect”, this will open the code by the side as seen below.

So the image above shows the card being wrapped in a html tag called “div tag” and inside the tag is an attribute called class, this class helps in repeating a style. So with it, you can access all the cards using beautifulSoup.

I am not interested in the images as it is not part of the requirement analysis. So I opened up the div tag to drill through to what I need. And that is the card I need.

Now for each data you want to scrape, you right click on it and go to the inspect, this will take you to the tag holding the data and the attributes that can help you scrap it.

Repeat this for all the items you want to scrape.

Explaining The Script

Let’s take a look at the code that scraped the desired data and I will walk you through on what they do.

From the code I declared the headers which is one of the optional parameters needed by the requests library.

Next I declared empty lists of the items I need from each card on the web page. The items I need are:

Title of each card that describes the laptop

Current state of the laptop

Price of the laptop

The next is declaring the pages variable using numpy arange method. This method returns evenly spaced values within a given interval.

Taking a quick study of the pagination in the url I noticed that each page increases by one and there are about 47 pages.

So the method says, start from page 1, loop through 50 pages or less if not up to 50, do this 1 page at a time.

As you already must have known, when you have nested for loop, the inner loop runs first.

So having found all the tags and attributes holding the items I want, I used the “find()” method in BS4 which gets one item at a time.

This will run through all the cards in the page and return all the items and append them in their respective lists.

Then the outer for loop will then loop through each page to get the same sets of data for different laptops posted.

Soup variable is used to save the BS4 class, while acc_data is used to store the data gotten from the card using BS4 method “find_all()”. This method finds all the cards that have the same class.

Because this is looping through cards with the same attribute, it will likely get data that are just adverts, we will take care of this during the analysis and prepping with Power BI.

It is also important to note that for you can easily break into this industry with these stack, you will need to be creative. There is not one style fit all in web scraping

After running the loop I converted the list into a dataframe using pandas and saved it in a csv file called ebay_laptops. Below is part of the dataset.

Conclusion

I will stop here for the extraction phase of this project. It is already lengthy. In this beginner friendly etl project with python, postgres and power bi we have:

Seen how to easily use requests library to download the website

How to understand and navigate around the web page to find what we need

learnt the fundamental of extracting data using beautiful soup,

Seen how to approach problem statement

Convert list into dataframe and dataframe into csv file in one line of code.

You can see that at this point you have successfully done a web scraping project and acquired a basic web scraping skill using BS4.

Now join me to part two of this project where we will transform this data to look more like the possible solution for all the requirement analysis from Global Sygnature CEO.